Partner Panels

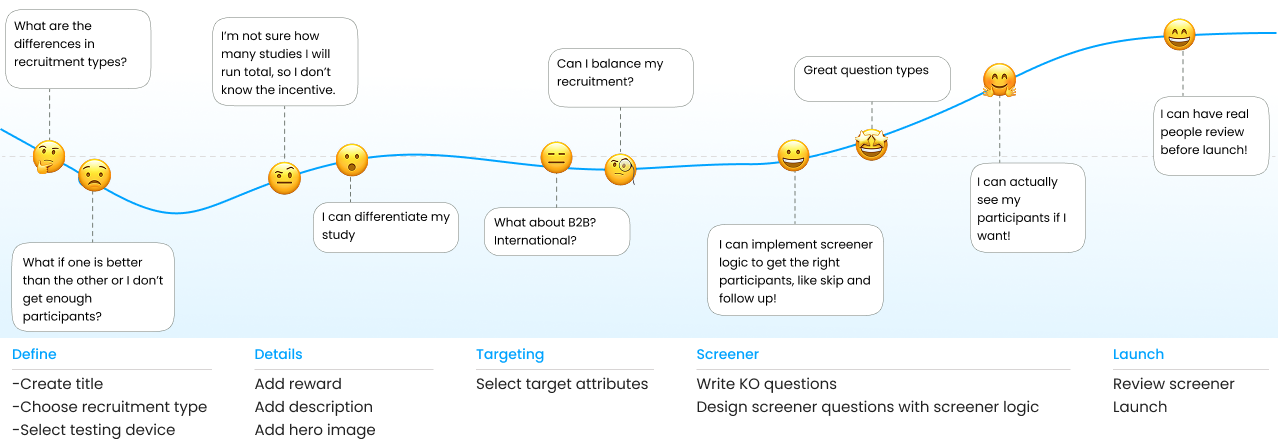

I mapped the end-to-end screener creation and recruitment flows across both Dscout and Respondent.io to identify gaps, failure points, and system-level mismatches. This surfaced critical differences that directly informed integration constraints and difficult design decisions. The key differences were:

- Dscout screeners persist with no expiration and can be reused across multiple studies, while Respondent enforces a one-to-one screener-to-study relationship, limiting reuse and scalability

    - The integration had to treat Respondent screeners as disposable, not canonical

- Dscout uses estimated incentives, allowing flexibility across studies and participant invites, while Respondent requires fixed incentives tied to a specific study

    - Incentive communication needed to preserve participant trust without exposing internal complexity

- Respondent requires users to choose B2B or B2C up front, which unlocks different targeting attributes, while Dscout does not enforce this distinction, creating structural mismatches in audience definition

    - This discrepancy affected feasibility expectations and participant trust

- Respondent did not support video or photo screener questions, which Dscout relies on to assess participant quality

    - This limited feature parity and required clear fallback logic

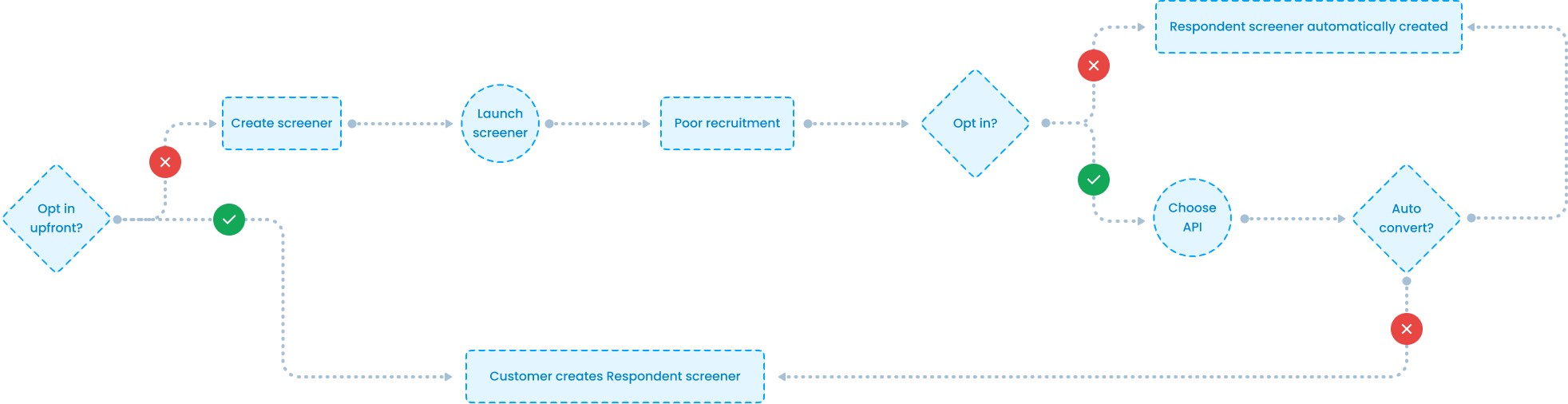

To evaluate how Respondent.io could integrate into Dscout's recruiting engine, I mapped a decision tree outlining the possible user flows. This helped surface edge cases, risk points, and unanswered questions early, before committing to a solution. Three primary integration models emerged, and the mapping also clarified what we would deliberately not do:

- We did not fully automate recruitment, prioritizing user awareness, trust, and cost transparency

- We did not force a single entry point, instead supporting both proactive (upfront) and reactive (supplemental) workflows

- We did not auto-migrate underperforming screeners, avoiding unexpected costs or participant limitations

- We did not make Respondent interchangeable with Dscout, preserving clear behavioral differences to prevent misuse

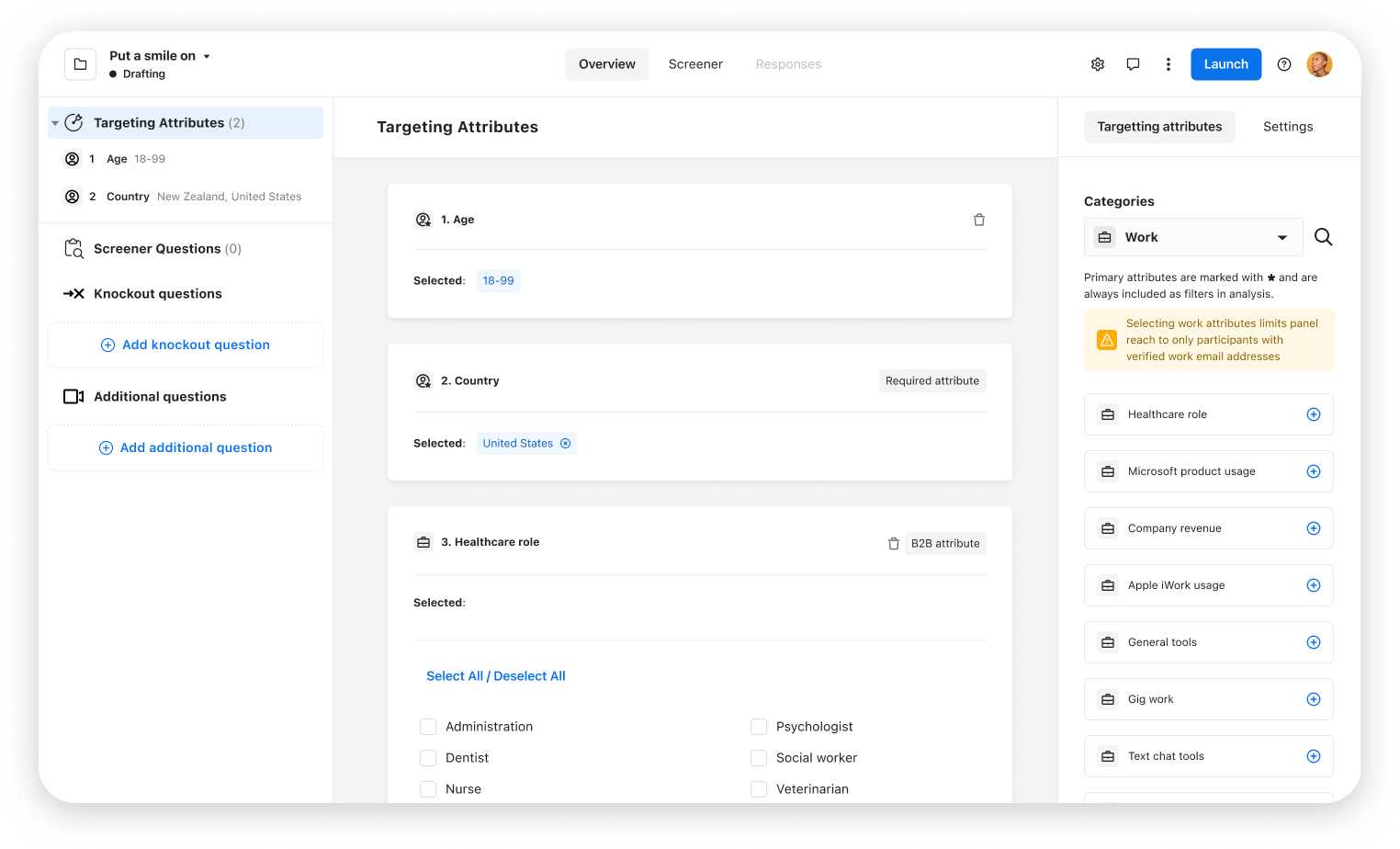

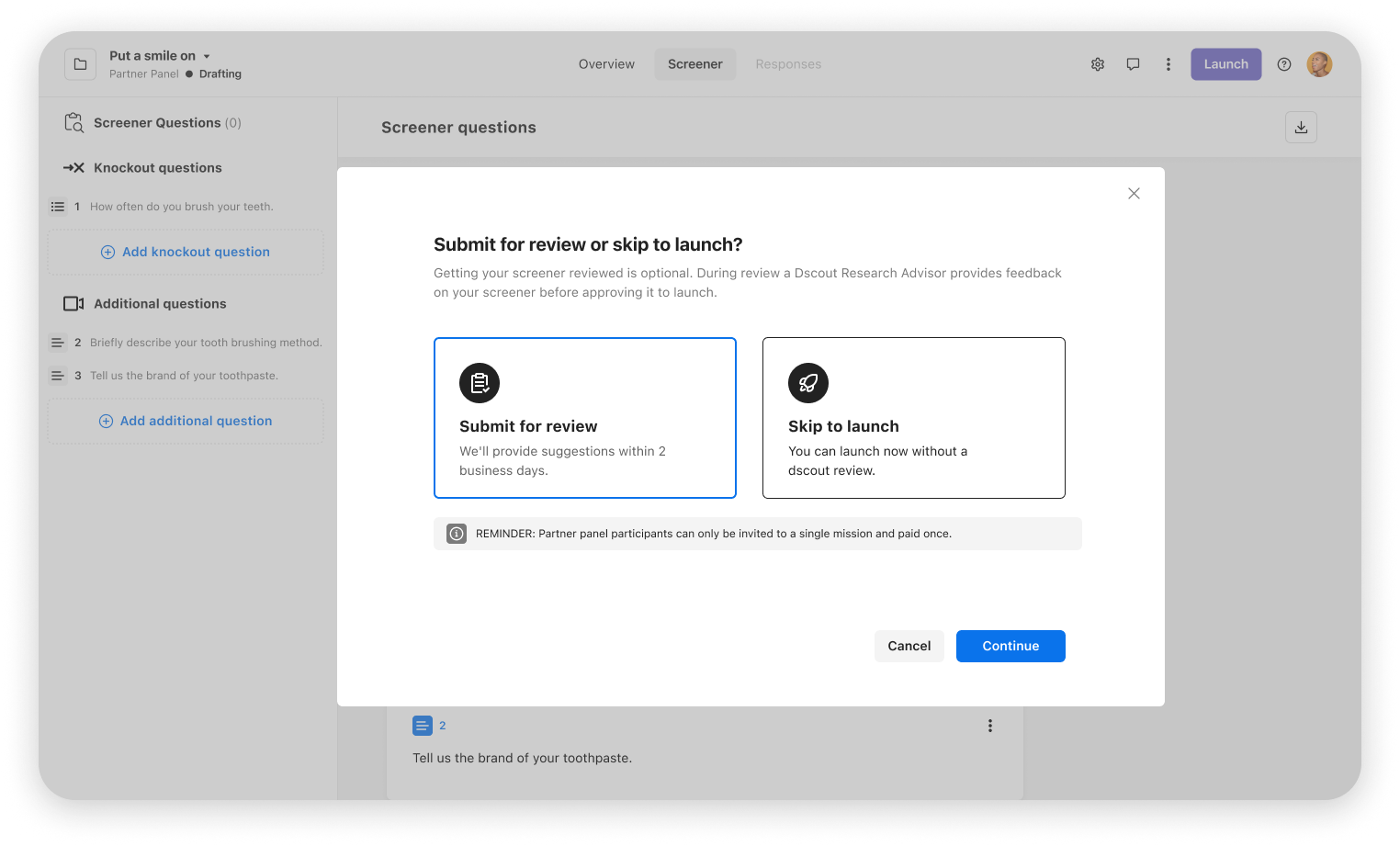

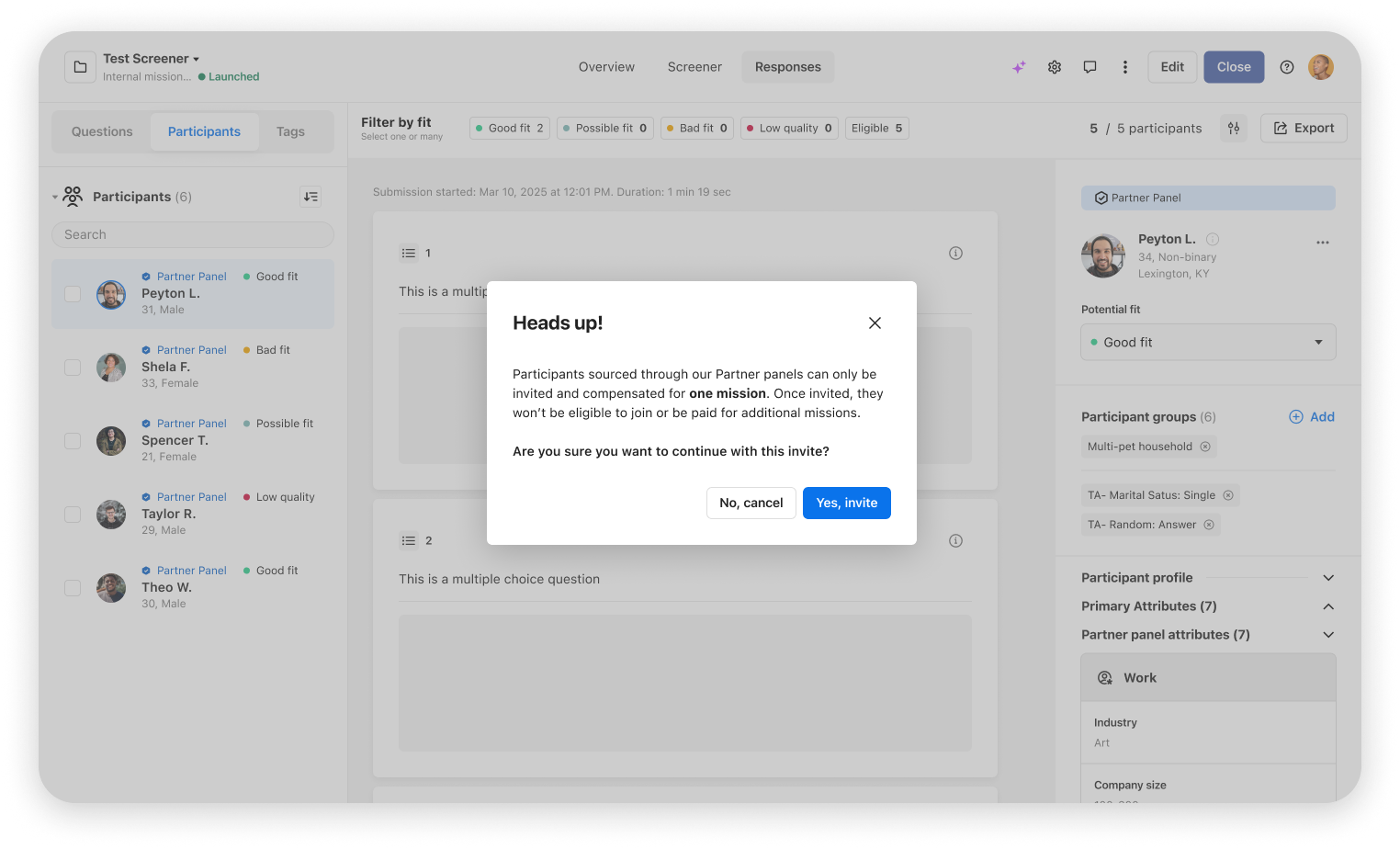

To support future recruiting sources, the integration was launched as Partner Panels, a flexible recruiting option that researchers can intentionally use either:

- Upfront, when niche recruiting needs are known

- As a supplement, when existing screeners underperform

To maintain existing mental models and minimize cognitive load:

- I used progressive disclosure to surface Partner Panel differences only when relevant

- I designed a participant tag that clearly identifies Partner Panel participants

- I surfaced key constraints (cost, participant reuse, incentive behavior) contextually rather than upfront
