What It's Optimizing For

Risks

Verdict

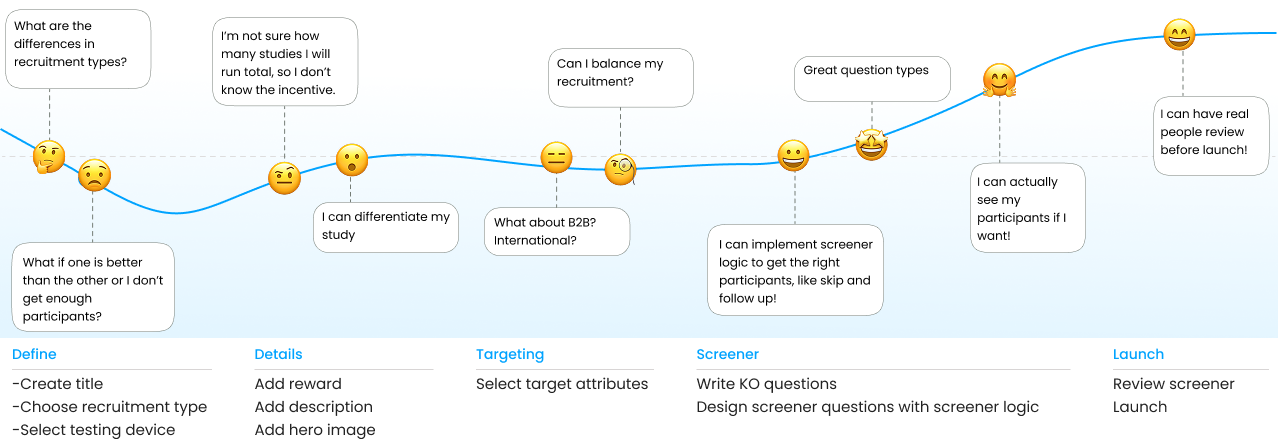

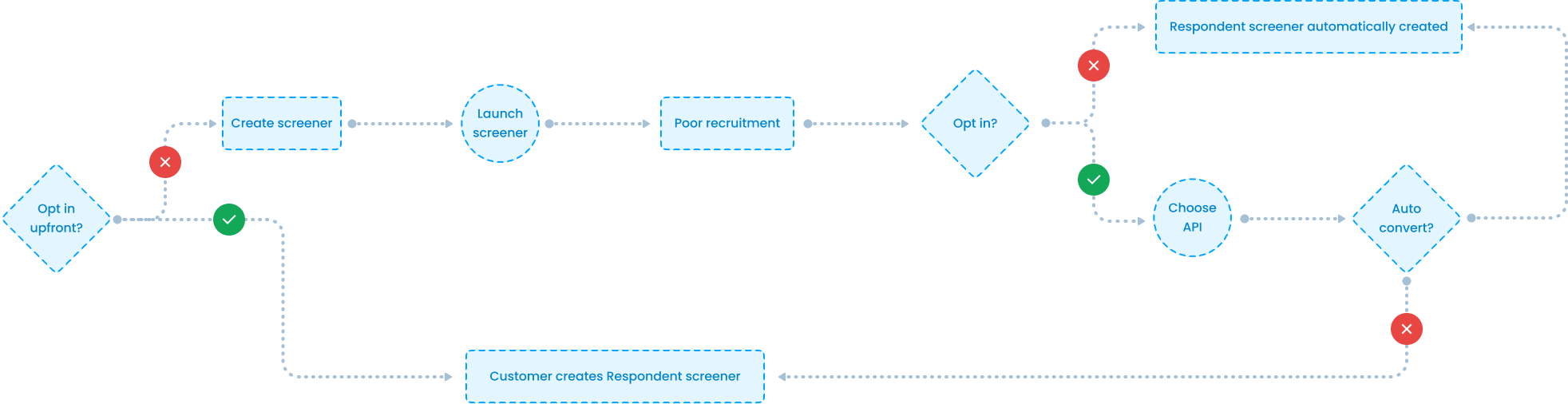

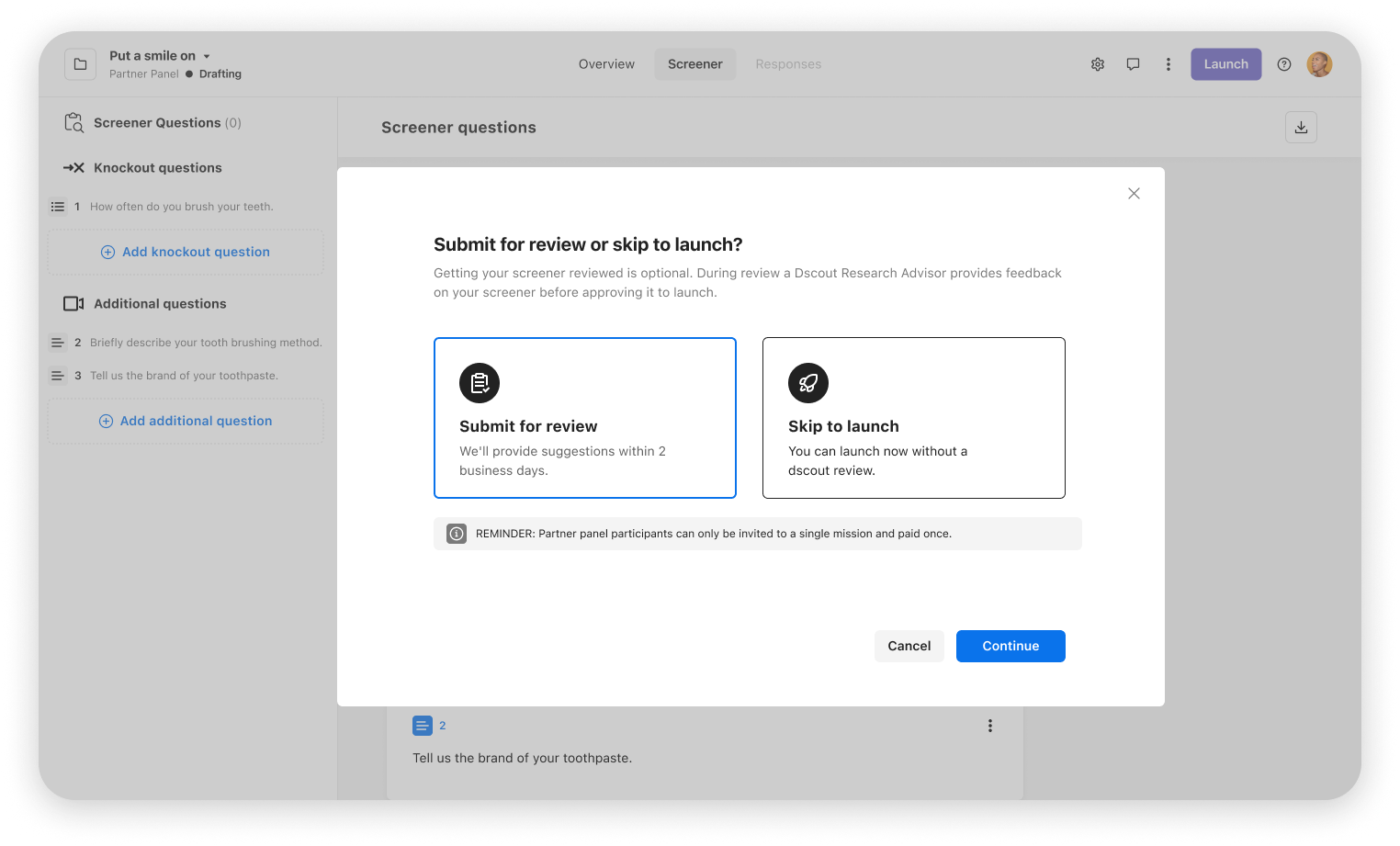

1. Respondent-First (Dedicated Screener Upfront)

Maximum transparency, operational safety, and minimal ambiguity around incentives and feasibility

Added friction, required vendor knowledge, and pool cannibalization

This approach was the safest operationally. Respondent is an intentional recruiting path that can be used either upfront or as a supplement.

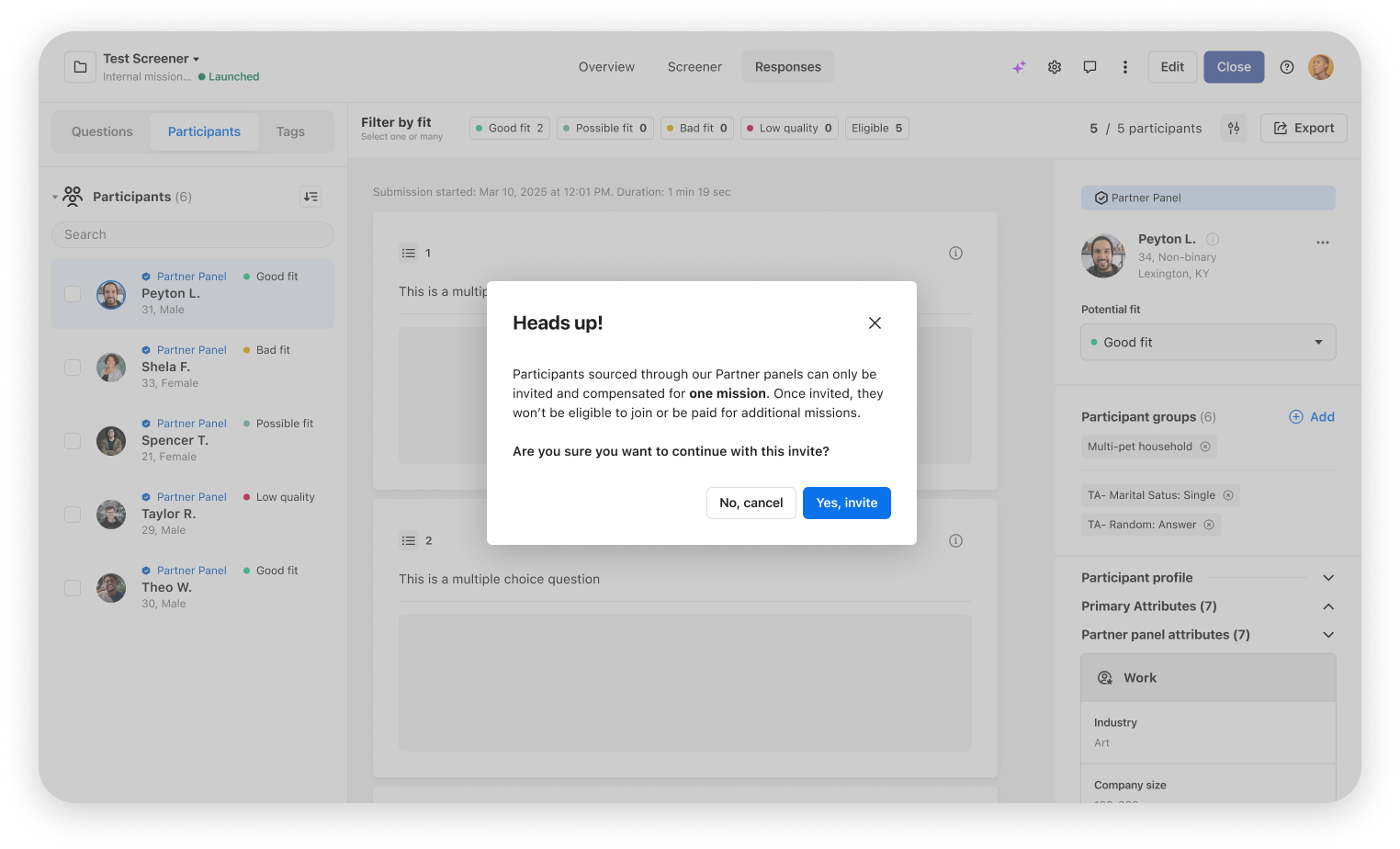

2. Deferred Opt-In (Triggered in Context)

Contextual control, Dscout-first recruiting, and less unnecessary exposure to complexity

Mid-flow complexity, trust risk during active studies, and the challenge of explaining behavioral differences (costs, reuse limits, incentive rules) at the right moment

This model balanced control and invisibility but still required careful communication design.

3. Full Automation (End-to-End)

An invisible experience with no user input and no new UI: the ideal customer experience, if it worked

Ambiguous triggers, non-convertible screeners, unclear cost and reuse rules, and participant limitations that are hard to communicate

While ideal in theory, full automation introduced too much ambiguity and risk without strong guardrails. Again, full parity isn't feasible.