Survey Platform

I led discovery with 16 suppliers and panel partners, including the owners of Points2Shop (pictured above), to validate assumptions and understand systemic constraints. Interviews focused on traffic patterns, onboarding workflows, branding needs, and engagement challenges. I synthesized the findings through affinity mapping to identify themes that could inform long-term strategy. The following findings shifted the effort from a UI refresh to a platform-level rethink centered on time cost, trust, and perceived value.

- Mobile traffic dominated, but the experience was desktop-only

- Panels lacked visual differentiation, weakening brand trust and recognition

- Profiling exceeded 100 questions, with frequent duplication across surveys

- Long onboarding created early drop-off and suppressed repeat participation

To ground the vision in real behavior, I framed the experience around three motivation-based archetypes rather than demographic segments. These archetypes were synthesized from interview patterns, observed behavioral signals, and domain knowledge from product and design leadership, not from formal persona research.

- Large portions of the navigation had zero usage (highlighted in red rectangles above)

- High-value tasks were scattered across unrelated sections

- Usage patterns varied significantly by role

This gave us the confidence to simplify aggressively without fear of breaking real workflows.

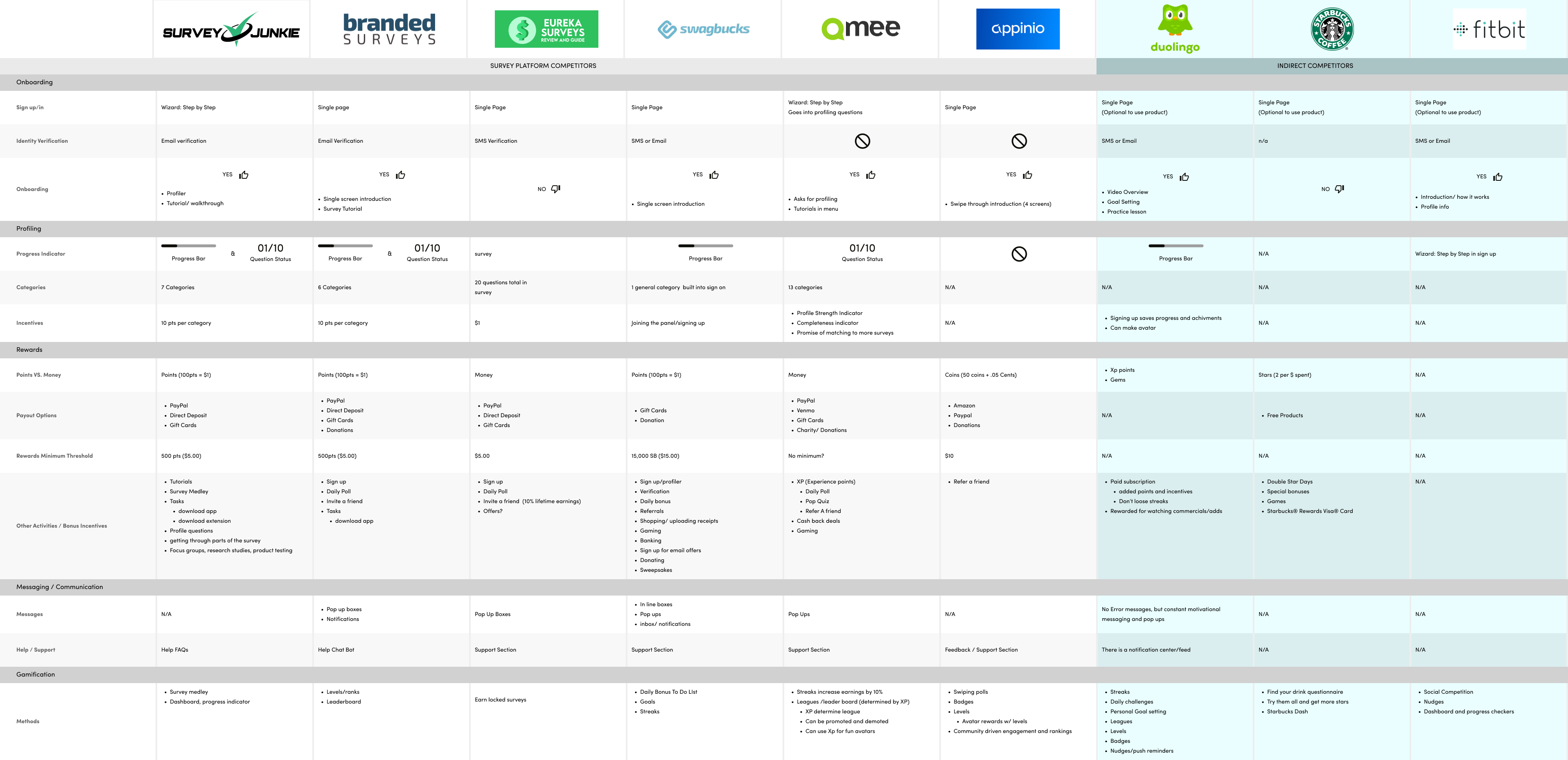

To shape the north star vision, I conducted a detailed review of competing survey platforms and adjacent engagement products. I evaluated onboarding flows, profiling methods, reward models, gamification strategies, and messaging and communication mechanics. The goal was to identify patterns, uncover gaps, and highlight opportunities for differentiation. Key strategic insights:

- Onboarding: Most platforms rely on single-page sign-ups or step-by-step wizards, but few provide guidance or time transparency, which leads to early drop-off.

- Profiling: Progress indicators are inconsistent, and lengthy profiling or unclear categories reduce survey completion.

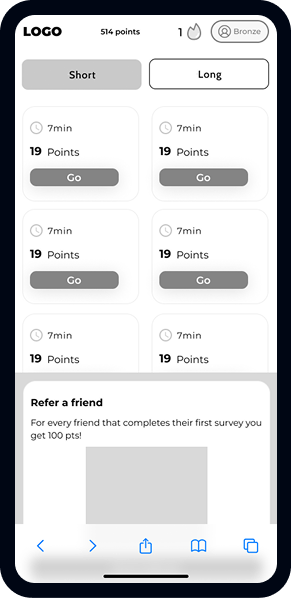

- Rewards: Direct payment is common, but point-based systems tied to milestones, streaks, or levels are emerging as engagement levers.

- Gamification: Elements like streaks, leaderboards, and XP-based progression are effective at sustaining retention, particularly for users with short attention spans or competitive tendencies.

- Communication: Motivational messaging and real-time feedback are underutilized. Clear, timely prompts could reinforce user behavior.

Mapping these best practices to the user archetypes translated the research into a cohesive, forward-looking design vision and provided a foundation for roadmap prioritization. The analysis shaped the north star strategy by highlighting opportunities to:

- Introduce time-based survey selection to reduce abandonment

- Simplify progressive profiling while maintaining data quality

- Enable flexible branding for panel differentiation

- Build lightweight gamification that rewards ongoing participation

- Use motivational messaging and feedback loops to strengthen retention

The core strategy was to reduce uncertainty and increase perceived control. If users understand how long something will take, why it matters, and what they gain, they are far more likely to engage and return. At the same time, suppliers needed flexibility to differentiate their panels without creating custom builds or added maintenance.

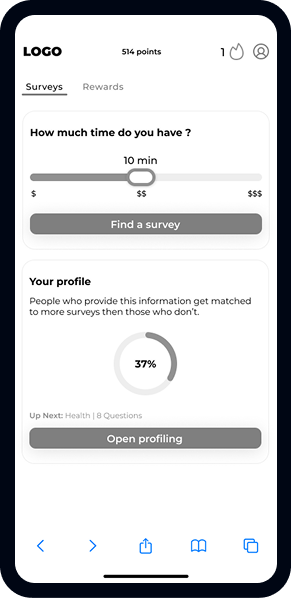

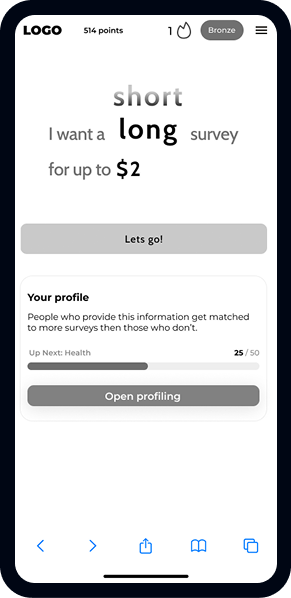

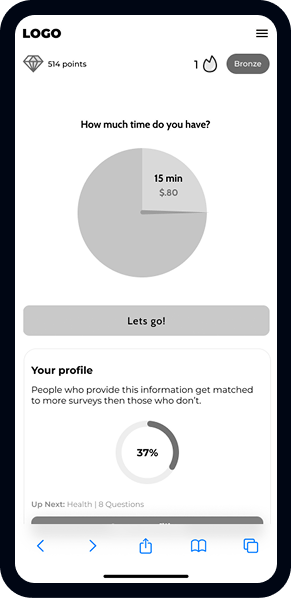

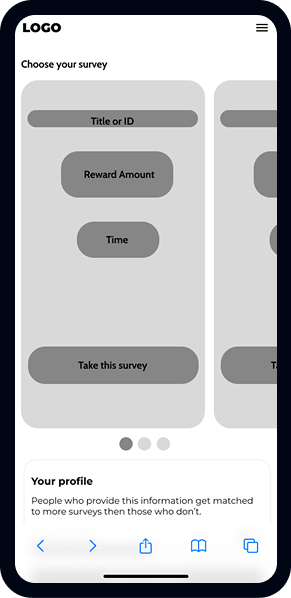

I introduced a time selection model that allows users to indicate how much time they have available. The system surfaces surveys that fit within that window, setting expectations upfront and establishing a clear value exchange. This concept informed future prioritization around time transparency and survey predictability.

To address brand dilution, I designed a flexible theming system that supports multiple brand colors and custom illustrations in defined areas of the portal. This provided a framework for partner differentiation that could be incrementally adopted without bespoke builds.

Working closely with Product, I reimagined profiling as a progressive system focused on the highest-signal questions. A visible progress indicator reframed profiling as a finite task and connected completion to better survey matching. This concept influenced roadmap discussions around data strategy and onboarding simplification.

I proposed an engagement layer built around XP, leagues, and streaks. Rather than gamifying surveys themselves, this system rewarded participation across the ecosystem and reinforced return behavior. The framework became a reference point for future retention initiatives.

The north star flow included end-of-survey actions that encouraged continued participation and allowed users to rate surveys. This balanced supply health with participant voice and informed later exploration into quality signals and trust mechanisms.
